Kleptocracy is a system of government in which leaders or officials exploit their power to steal resources from the country they govern, often for personal gain. The word comes from the Greek words kleptes (meaning thief) and kratos (meaning power or rule).

Some characteristics of kleptocracies:

Corruption

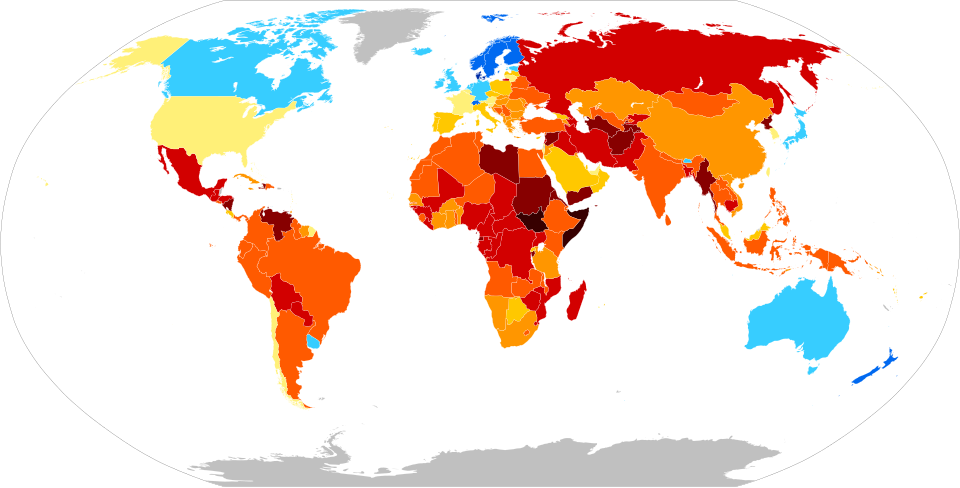

Rulers and officials systematically steal state resources. Corruption is usually so rampant that the ruling class enriches itself at the expense of the population, often leading to extreme inequality, poverty, and a lack of basic services for citizens. In 2024, the United States scored 65 out of 100 on the Corruption Perceptions Index published by Transparency International, where 0 indicates a highly corrupt public sector and 100 a very clean one. The world map above illustrates corruption levels across the globe.

Exploitation of Public Office

Government positions are often used for personal enrichment rather than for serving the public. Kleptocracy enriches not only high government officials but also a narrow class of plutocrats: usually wealthy individuals and families who have amassed great assets through political favoritism, special interest legislation, monopolies, special tax breaks, state intervention, subsidies, or outright graft.

Lack of Accountability

Kleptocratic leaders are often above the law, using their power to silence opposition and evade prosecution. Kleptocratic finance also flourishes in the United States, where the country's liberal economic structure is illegally abused, for two reasons:

- The United States does not have a public beneficial ownership registry, and kleptocrats take advantage of the resulting anonymity.

- Kleptocrats use incorporation agents, lawyers, and realtors, who may unknowingly launder money on their behalf.

In 2025, the United States scored a 3.9 out of 10 on the Opacity in Real Estate Ownership (OREO) Index.

Weak Institutions

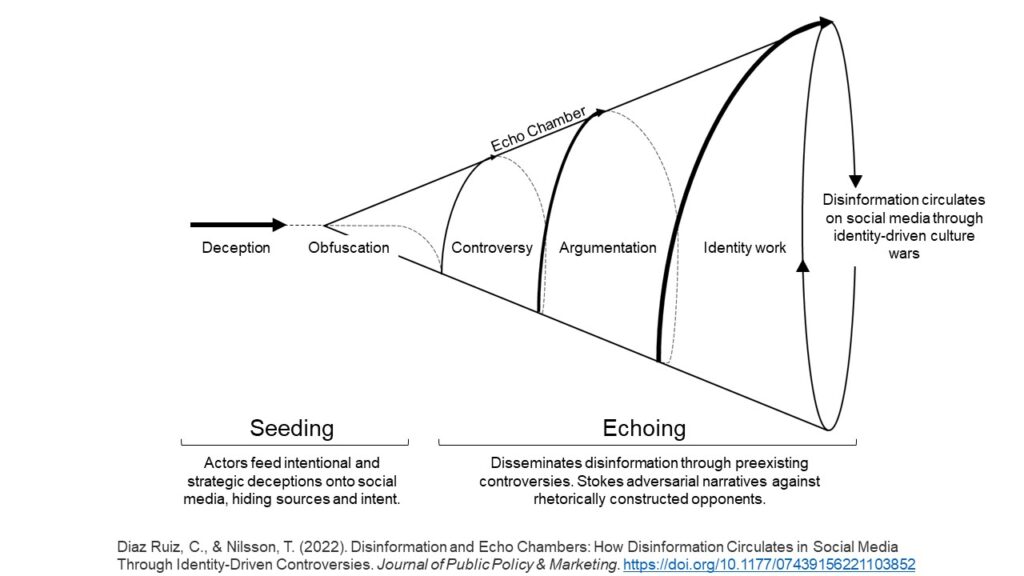

The legal and political institutions necessary to prevent corruption (like an independent judiciary or a free press) are often undermined. As the judiciary becomes ineffective, the rule of law diminishes. As the free press is muzzled, it becomes easier to spread disinformation that conceals the theft of assets.

Examples of Kleptocracy

Russia

Under Vladimir Putin, Russia has been widely considered a kleptocracy, where state resources are siphoned off by the ruling elite, including oligarchs close to the president. There has been widespread corruption in state-owned enterprises, and political dissent is often suppressed.

Venezuela

The government under Hugo Chávez and his successor Nicolás Maduro has been accused of corruption, including embezzlement and the diversion of state resources, especially from the country’s oil revenues. This has contributed to the country’s ongoing economic and political crisis.

Nigeria

Nigeria, particularly under military dictatorships such as that of Sani Abacha, has experienced significant corruption. Abacha’s regime is notorious for looting billions of dollars from the nation’s treasury. Many Nigerian officials have been accused of embezzling public funds, leading to severe inequality and underdevelopment despite the country’s oil wealth.

Equatorial Guinea

President Teodoro Obiang Nguema has been in power since 1979 and has presided over a regime where the country’s vast oil wealth is largely controlled by his family and close associates. Despite the nation’s oil riches, most of the population lives in poverty.

Zimbabwe

Under Robert Mugabe’s rule, Zimbabwe became a classic example of kleptocracy. Mugabe and his associates are alleged to have diverted large sums of state funds and resources to themselves, while the country descended into hyperinflation, economic collapse, and widespread poverty.

United States of America

Some have accused the U.S.A. of supporting kleptocracy by providing a place to hide and launder plundered assets.

Others have gone further, pointing to the firing of inspectors general, who serve as an independent check on mismanagement and abuse of power within government agencies, and to the lack of integrity rules in Congress and the Supreme Court of the U.S., which allows these officials to profit from their positions.

The current administration has removed or diminished many checks on kleptocracy in the United States.

The president now seems to face no limits on money-making schemes that use his position to profit himself, his family, and his political supporters.